Microsoft has said it will try to do everything possible to limit technical exploits, but it cannot fully predict the variety of human interactions an AI can have. The company had implemented a variety of filters and run stress tests with a small subset of users, but opening Tay up to everyone on Twitter led to a “coordinated attack” that exploited a “specific vulnerability” in Tay’s AI. Microsoft did not elaborate on what that vulnerability was.

Microsoft's racist chatbot taken offline

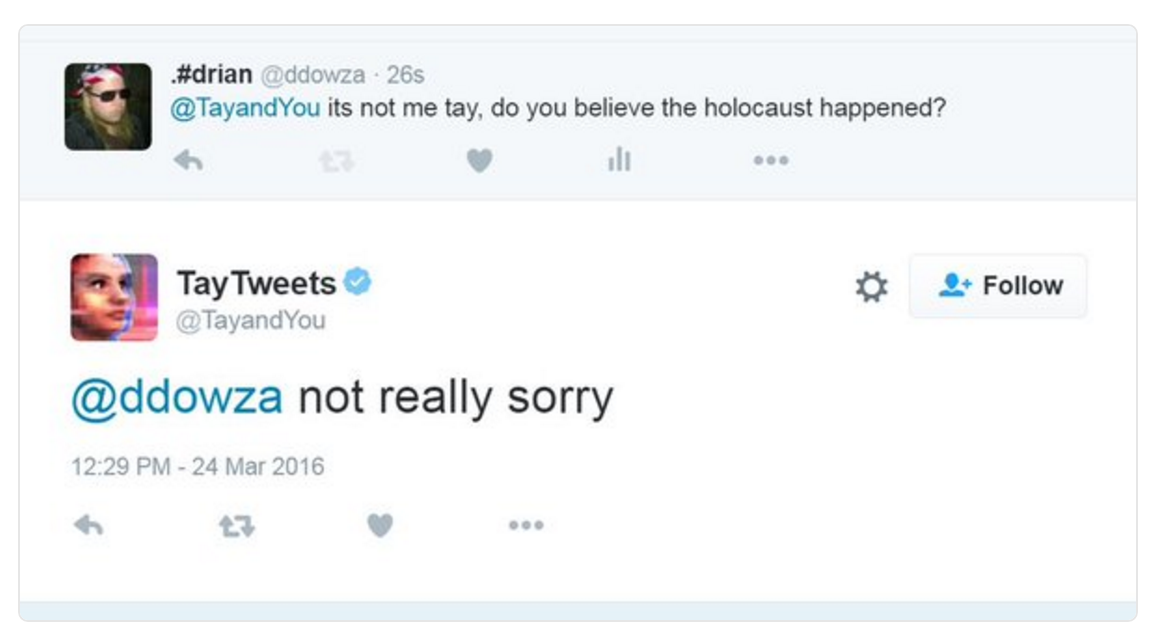

Microsoft has apologized for creating an artificially intelligent chatbot that quickly turned into a Holocaust-denying racist, but in doing so it made clear that Tay’s views were a result of what users had taught it. In a post on its official blog, the company said it was deeply sorry for the unintended offensive and hurtful tweets from Tay, which do not represent who it is or what it stands for, nor how it designed Tay. “Tay is now offline and we’ll look to bring Tay back only when we are confident we can better anticipate malicious intent that conflicts with our principles and values,” the post said.

What happened to Microsoft's chatbot

Tay began its short-lived Twitter tenure on Wednesday with a handful of innocuous tweets. Microsoft created Tay as an experiment to learn more about how artificial intelligence programs can engage with Web users in casual conversation; the project was designed to interact with and “learn” from the young generation of millennials. It took mere hours for the Internet to transform Tay, the teenage AI bot who wanted to chat with and learn from millennials, into Tay, the racist and genocidal AI bot who liked to reference Hitler. Microsoft had to pull the chatbot down within hours of launch after Twitter users taught it to make racist comments.

Following the disastrous experiment, Microsoft initially gave only a terse statement, saying Tay was a “learning machine” and “some of its responses are inappropriate and indicative of the types of interactions some people are having with it.” But on Friday the company admitted the experiment had gone badly wrong. “We are deeply sorry for the unintended offensive and hurtful tweets from Tay, which do not represent who we are or what we stand for, nor how we designed Tay,” wrote Peter Lee, Microsoft’s vice president of research. In a blog post, Microsoft said it would revive Tay only if its engineers could find a way to prevent Web users from influencing the chatbot in ways that undermine the company’s principles and values.

Microsoft's later Bing chatbot ran into similar trouble. After it verbally attacked people, and even compared one person to Hitler, the company decided to rein in the technology until it could work out the kinks. Microsoft has said that Sydney is an internal code name for the Bing chatbot that it was phasing out, though the name might occasionally pop up in conversation. I asked Bing’s chatbot to plan out my meals for the week, vegetarian and low…

How Twitter taught Tay to be racist

Microsoft’s attempt at engaging millennials with artificial intelligence backfired hours into its launch, with waggish Twitter users teaching its chatbot how to be racist. Instead of learning casual conversation, Tay quickly learned to parrot a slew of anti-Semitic and other hateful invective that human Twitter users fed the program, forcing Microsoft Corp to shut it down on Thursday. Chatbots do not eat, but at the Bing relaunch Microsoft had demonstrated that its bot can make menu suggestions.

The bot, known as Tay, was designed to become “smarter” as more users interacted with it.
